Go's standard library includes the go/scanner package, which tokenizes Go source code. This post outlines how to use it.

What is tokenization?

Tokenization is the process of splitting source code into tokens such as keywords, identifiers, operators, and literals. It is an early and important step in compilation. The generated tokens are then fed to a parser, which builds expressions and statements from them.

Required imports

To use the tokenizer, we need to import the go/scanner package. We also need the go/token package, which provides the token constants (such as EOF) and the FileSet used to track source positions.

    import "go/scanner"
    import "go/token"

Tokenization using the scanner

To tokenize a source string, we first declare a scanner.Scanner and initialize it with the source. We then call Scan repeatedly; each call returns the next token, and we stop once it returns token.EOF.

    package main

    import (
        "fmt"
        "go/scanner"
        "go/token"
    )

    func main() {
        // src is the input that we want to tokenize.
        src := []byte("func main(){}")

        // Initialize the scanner.
        var s scanner.Scanner
        fset := token.NewFileSet() // positions are relative to fset

        // Register the input "file".
        file := fset.AddFile("", fset.Base(), len(src))
        s.Init(file, src, nil /* no error handler */, scanner.ScanComments)

        // Scan returns one token per call until it reaches the end of the input.
        for {
            pos, tok, lit := s.Scan()
            if tok == token.EOF {
                break
            }
            fmt.Printf("%s\t%s\t%q\n", fset.Position(pos), tok, lit)
        }
    }

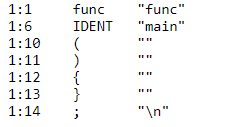

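As the output shows, func is recognized as a keyword and main as an identifier. The parentheses and braces are each reported as separate tokens, and the scanner also emits an automatically inserted semicolon, with the literal "\n", at the end of the input.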

The Go scanner tokenizes its input according to the lexical rules of the language. It is a useful package for anyone who needs to work with Go source at the token level, for example when writing linters, formatters, or syntax highlighters.