Good morning, Gophers. Have you ever been frustrated in Go by having to download files from the internet by hand, save them one by one to a directory, and only then open them in your Go code? Then you’ve come to the right place. Today I’m going to share all the secrets of downloading files from the internet directly into the same directory as your Go files, so let’s get on with it.

Downloading a File Using the net/http Package

We can download a file using net/http as follows:

```go
package main

import (
	"fmt"
	"io"
	"log"
	"net/http"
	"net/url"
	"os"
	"strings"
)

func main() {
	fullURLFile := "put_your_url_here"

	// Build fileName from the last segment of the URL path
	fileURL, err := url.Parse(fullURLFile)
	if err != nil {
		log.Fatal(err)
	}
	segments := strings.Split(fileURL.Path, "/")
	fileName := segments[len(segments)-1]
	if fileName == "" {
		log.Fatalf("no file name in URL %q", fullURLFile)
	}

	// Create blank file
	file, err := os.Create(fileName)
	if err != nil {
		log.Fatal(err)
	}
	defer file.Close()

	// A Client with no CheckRedirect function follows up to 10 redirects
	client := http.Client{}

	// Put content on file
	resp, err := client.Get(fullURLFile)
	if err != nil {
		log.Fatal(err)
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		log.Fatalf("bad status: %s", resp.Status)
	}

	size, err := io.Copy(file, resp.Body)
	if err != nil {
		log.Fatal(err)
	}

	fmt.Printf("Downloaded a file %s with size %d\n", fileName, size)
}
```

The steps are easy to understand if you’ve followed the previous tutorials:

- We use url.Parse to parse the URL, then split its path on "/" and take the last segment as the file name

- We create an empty file with os.Create, handling any error

- We define an http.Client and use it to request the URL

- Finally, we use io.Copy to stream the response body into the empty file

Using the grab Package

The other option, if you don’t want to code the HTTP client yourself, is to use a third-party package, and there’s a relatively easy one to use by Ryan Armstrong. grab is a download manager for Go that, among other things, lets you monitor download progress:

```shell
go get github.com/cavaliercoder/grab
```

For example, the following code will download the popular book “An Introduction to Programming in Go”.

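```go
package main

import (
	"fmt"
	"log"

	"github.com/cavaliercoder/grab"
)

func main() {
	// Download the file into the current directory (".")
	resp, err := grab.Get(".", "http://www.golang-book.com/public/pdf/gobook.pdf")
	if err != nil {
		log.Fatal(err)
	}

	fmt.Println("Download saved to", resp.Filename)
}
```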

The grab package is built around Go channels, so it really shines when downloading large batches of files concurrently from remote file repositories.

Downloading Bulk Files

If you are downloading bulk data from a website, there is a good chance the site will block you. In that case, you can route your requests through a VPN or proxy to keep them from being blocked.

Other Options to download files – wget and curl

I promised to give you all the options, and it would be really unfair if I didn’t cover these. So this section is about downloading a file from your terminal. Whatever editor you’re coding in, you can usually bring up a terminal:

- VS Code – Ctrl + `

- Atom – Ctrl + `

This is an easy method for beginners, since you can download files from a URL directly into your current directory.

The first is wget, a fantastic tool for downloading ANY large file from a URL:

```shell
wget "your_url"
```

The main reason I use wget is that it has a lot of useful features, such as recursively downloading a website. You could simply run:

```shell
wget -r "websiteURL"
```

and it will download the website up to 5 levels deep, which is wget’s default. You can choose the number of levels with the -l flag:

```shell
wget -r -l# "websiteURL"
```

Replace the # with any number, or use 0 (or inf) to remove the depth limit and download the whole website.

By default, the links in the downloaded pages still point to the original site. With -k, wget rewrites them to point to the local files once the download finishes:

```shell
wget -r -k "websiteURL"
```

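But there’s more! You don’t have to remember so many flags. You can mirror an entire website with -m, which turns on recursion (-r) with unlimited depth (-l inf) and timestamping (-N). Note that -m does not convert links, so add -k as well (wget -m -k) if you want to browse the copy offline.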

```shell
wget -m "websiteURL"
```

Want more? Because THERE IS MORE!

You can put the links of all the files you want to download in a .txt file, one per line, save it, and just run:

```shell
wget -i /
```

to download all of them.

If the files all live on the same website, you don’t even have to put the full URL in the file. Just list the file names like so:

Contents of file.txt:

```text
file1.zip
file2.zip
file3.zip
...
```

and then you can simply run:

```shell
wget -B http://www.website.com -i /
```
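The same base-URL trick is easy to reproduce in Go with `(*url.URL).ResolveReference`. A sketch under my own assumptions: `resolveAll` is a hypothetical helper, and it follows RFC 3986 resolution, so a base without a trailing slash drops its last path segment (`http://example.com/files` plus `a.zip` gives `http://example.com/a.zip`). Absolute entries pass through unchanged.

```go
package main

import (
	"fmt"
	"log"
	"net/url"
)

// resolveAll resolves each (possibly relative) name against base.
func resolveAll(base string, names []string) ([]string, error) {
	b, err := url.Parse(base)
	if err != nil {
		return nil, err
	}
	out := make([]string, 0, len(names))
	for _, n := range names {
		ref, err := url.Parse(n)
		if err != nil {
			return nil, err
		}
		out = append(out, b.ResolveReference(ref).String())
	}
	return out, nil
}

func main() {
	urls, err := resolveAll("http://www.website.com", []string{"file1.zip", "file2.zip", "file3.zip"})
	if err != nil {
		log.Fatal(err)
	}
	for _, u := range urls {
		fmt.Println(u)
	}
}
```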

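Also, some websites notice that you’re downloading a lot of files, which can strain their servers, so they start rejecting your requests with an HTTP error (such as 403 Forbidden or 429 Too Many Requests). We can get around this with:

```shell
wget --wait=1 --random-wait -i /
```

Here, --random-wait makes wget pause between 0.5 and 1.5 times the --wait value (1 second) between requests, to simulate a human downloading frequency.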

In short, wget is hard to beat as a command-line download manager.

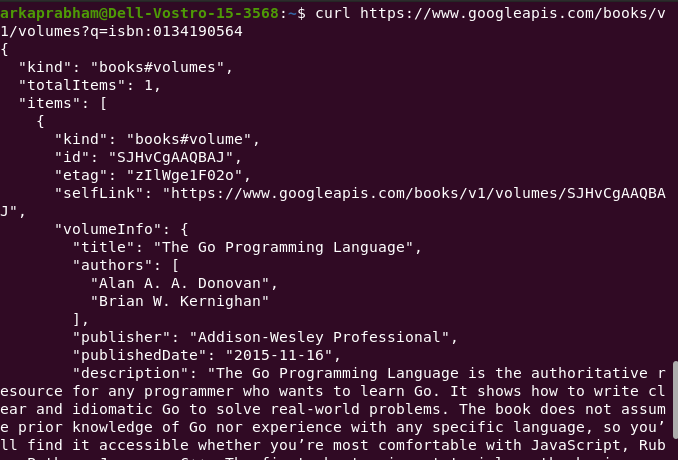

Next, we move on to curl. It is another CLI download tool, but it works differently from wget.

curl lets you interact with remote systems by sending them requests and displaying their responses to you. Those responses can be files or images, but they can also be the results of API calls. curl supports over 20 protocols, including HTTP, HTTPS, SCP, SFTP, and FTP. And because it writes to standard output by default, curl arguably integrates more easily with pipes, other commands, and scripts.

If we wget a web page, it saves the page as an .html file, whereas running:

```shell
curl "URL"
```

prints the output in the terminal window. To save it, we have to redirect the output to an HTML file:

```shell
curl "URL" > url.html
```

curl also supports resuming interrupted downloads (for example, curl -C - -O "URL").

curl can also do a few other handy things. For example, we can retrieve just the header metadata:

```shell
curl -I www.journaldev.com
```

We can also download a list of URLs:

```shell
xargs -n 1 curl -O < filename.txt
```

xargs passes the URLs to curl one at a time, and -O saves each file under its remote name.

We can make API calls:

Ending Notes

For tar and zip files, I find it more convenient to simply use the wget command, especially since I can follow it with tar -xzvf to unpack a .tar.gz archive. For image files, on the other hand, the net/http package is very capable. Meanwhile, the community is still working on more robust, concurrent download packages.