Today, we will be discussing content delivery networks (CDNs). A CDN is a distributed network of edge servers that facilitates the delivery of web content to users across the globe.

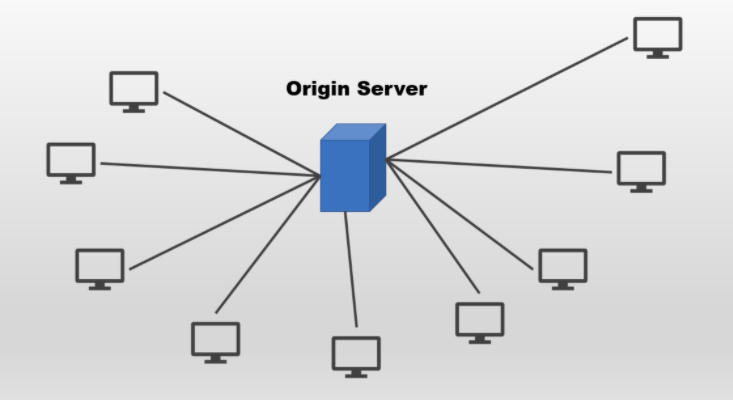

Traditionally, most companies serve content from a single origin, scaling it horizontally (adding more servers at that location) to support their user base.

This setup can cause additional packet loss, delay, and jitter for users located far away from the origin server. The problem grows when multiple round trips are required to load the complete web content. Moreover, longer load times often drive customers away, resulting in a lower conversion rate.
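To make the cost of distance and round trips concrete, here is a back-of-the-envelope sketch. It assumes signals travel through optical fiber at roughly 200,000 km/s and ignores routing detours, queuing, and server processing time, so real latencies will be higher. The distances and the round-trip count are illustrative assumptions.

```python
# Rough estimate of the delay caused purely by distance.
# Assumption: signals travel through fiber at ~200,000 km/s; routing
# detours, queuing, and server processing time are ignored.
FIBER_SPEED_KM_PER_MS = 200.0  # 200,000 km/s == 200 km per millisecond


def round_trip_ms(distance_km: float) -> float:
    """Minimum round-trip time (there and back) over a given distance."""
    return 2 * distance_km / FIBER_SPEED_KM_PER_MS


def load_delay_ms(distance_km: float, round_trips: int) -> float:
    """Delay added by distance when a page needs several sequential round trips."""
    return round_trips * round_trip_ms(distance_km)


if __name__ == "__main__":
    # Illustrative numbers: a distant origin vs. a nearby edge server,
    # for a page that needs 4 sequential round trips.
    print(f"origin 10,000 km away: {load_delay_ms(10_000, 4):.0f} ms")  # 400 ms
    print(f"edge server 500 km away: {load_delay_ms(500, 4):.0f} ms")   # 20 ms
```

Under these assumptions, the distant origin adds about 400 ms before any processing happens, while a nearby edge server adds about 20 ms, and the gap widens with every extra round trip.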

Content Delivery Network

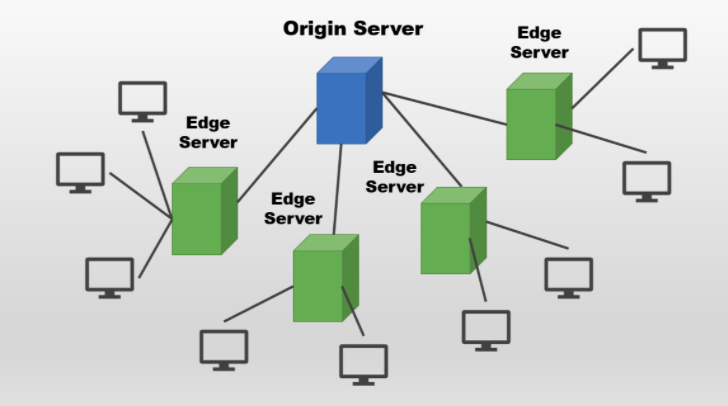

The CDN architecture was conceived to bring content closer to users regardless of their location.

The basic idea behind a CDN is to distribute many physical nodes around the world that serve cached content over high-bandwidth connections to nearby users. These nodes are known as points of presence (POPs). Dynamic content cannot simply be cached, so for it most CDN providers use some form of network and route optimization: requests are automatically routed to the nearest POP and then forwarded to the origin over an optimized path.
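Real CDNs steer users to a POP through mechanisms such as DNS-based routing or anycast, usually weighing measured latency and load rather than raw distance. As a simplified sketch of the "nearest POP" idea only, the code below picks the POP with the smallest great-circle (haversine) distance to the user. The `Pop` type, the POP names, and their coordinates are hypothetical.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_KM = 6371.0


@dataclass(frozen=True)
class Pop:
    name: str   # hypothetical POP identifier
    lat: float  # latitude in degrees
    lon: float  # longitude in degrees


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


def nearest_pop(user_lat: float, user_lon: float, pops: list[Pop]) -> Pop:
    """Return the POP geographically closest to the user (distance only)."""
    return min(pops, key=lambda p: haversine_km(user_lat, user_lon, p.lat, p.lon))


# Hypothetical POP locations (approximate city coordinates).
POPS = [
    Pop("frankfurt", 50.11, 8.68),
    Pop("singapore", 1.35, 103.82),
    Pop("virginia", 38.95, -77.45),
]

if __name__ == "__main__":
    # A user in Paris is routed to the Frankfurt POP.
    print(nearest_pop(48.86, 2.35, POPS).name)
```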

In addition, CDN providers use image and video optimization or compression to serve huge volumes of media content. This is one reason why a video you upload to YouTube is often available only in low quality, such as 360p, at first: processing the video and distributing it to all the edge servers takes time. Once processed, the content is cached and served to users based on their location and bandwidth. Higher-quality versions can take up to a few hours before they are served to users.
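Serving video "based on bandwidth" usually means choosing among several pre-encoded versions (renditions) of the same video. The hypothetical sketch below picks the highest resolution whose bitrate fits the measured bandwidth, considering only renditions that have finished processing, which mirrors why a fresh upload may offer only 360p. The bitrate ladder and the 0.8 safety factor are assumptions, not YouTube's actual values.

```python
# Hypothetical bitrate ladder: resolution label -> required bitrate in kbit/s.
LADDER_KBPS = {
    "360p": 700,
    "480p": 1_200,
    "720p": 2_500,
    "1080p": 5_000,
}


def pick_rendition(bandwidth_kbps: float, available: set[str], safety: float = 0.8) -> str:
    """Choose the best processed rendition that fits within a share of the bandwidth.

    Falls back to the lowest available rendition when nothing fits.
    """
    budget = bandwidth_kbps * safety  # leave headroom for bandwidth fluctuations
    ready = sorted((r for r in LADDER_KBPS if r in available), key=LADDER_KBPS.get)
    if not ready:
        raise ValueError("no rendition has finished processing yet")
    fitting = [r for r in ready if LADDER_KBPS[r] <= budget]
    return fitting[-1] if fitting else ready[0]


if __name__ == "__main__":
    # Right after upload only 360p is ready, so even a fast connection gets 360p.
    print(pick_rendition(10_000, {"360p"}))
    # Once processing finishes, the same connection gets the top rendition.
    print(pick_rendition(10_000, set(LADDER_KBPS)))
```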

The main reasons for using a CDN are to:

- decrease load time and latency

- prevent server overload and ensure better reliability

- improve security and help mitigate DDoS attacks

The following image outlines how CDNs work.

Decrease the Load Time and Latency

Instead of serving users directly from the origin server, a CDN distributes content to multiple edge servers in strategic locations. Each user is then served from the nearest edge server, which significantly reduces load time and latency because the content travels a shorter distance.

Prevent Server Overload

Edge servers cache content so they can serve it to users repeatedly. When a user requests a specific file, the edge server first checks whether it has a cached copy and requests the file from the origin server only if it does not.

The file usually remains cached on the edge server until its time-to-live (TTL) expires. The TTL depends on the provider and on how caching is configured; a typical default is about seven days.

Edge servers reuse the same cached copy to serve every user who requests that file. This significantly reduces the load on the origin server, which keeps it responsive and provides a better user experience.
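The check-the-edge-first flow with a TTL can be sketched as a small in-memory cache keyed by path. This is a hypothetical illustration rather than any provider's implementation: the `EdgeCache` class, the injected clock, and the seven-day TTL in the demo are assumptions, and real edge servers also honor origin headers such as `Cache-Control`.

```python
import time
from typing import Callable


class EdgeCache:
    """Serve from cache until the TTL expires; otherwise fetch from the origin."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch_from_origin
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: dict[str, tuple[bytes, float]] = {}  # path -> (content, expiry time)
        self.hits = 0
        self.misses = 0

    def get(self, path: str) -> bytes:
        now = self._clock()
        entry = self._store.get(path)
        if entry is not None and now < entry[1]:
            self.hits += 1  # fresh copy at the edge: the origin is not contacted
            return entry[0]
        self.misses += 1  # missing or expired: go back to the origin
        content = self._fetch(path)
        self._store[path] = (content, now + self._ttl)
        return content


if __name__ == "__main__":
    origin_requests = []

    def fake_origin(path: str) -> bytes:
        origin_requests.append(path)  # record every trip to the origin
        return b"<html>...</html>"

    fake_now = [0.0]  # manually advanced clock for the demo
    cache = EdgeCache(fake_origin, ttl_seconds=7 * 24 * 3600, clock=lambda: fake_now[0])

    for _ in range(1000):  # 1,000 users request the same file
        cache.get("/index.html")
    print(len(origin_requests))  # 1: only the first request reached the origin

    fake_now[0] = 8 * 24 * 3600  # eight days later, the TTL has expired
    cache.get("/index.html")
    print(len(origin_requests))  # 2: the expired copy was refetched
```

The origin sees one request per TTL window per file, no matter how many users ask for it, which is where the load reduction comes from.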

Better Security

Because users connect to the nearest edge server instead of the origin server, the edge servers act as a protective layer that can absorb traffic and help mitigate DDoS attacks. This makes it harder for attackers to take the origin server down or access it directly.
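One common building block behind this kind of protection is per-client rate limiting at the edge, so that floods of requests are dropped before they reach the origin. The sketch below is a minimal token bucket under that assumption; the `TokenBucketLimiter` class, the rate, the burst size, and keying by client IP are all hypothetical, and real DDoS protection combines many more techniques.

```python
import time
from typing import Callable


class TokenBucketLimiter:
    """Per-client token bucket: each client may burst up to `capacity`
    requests, then is limited to `rate` requests per second."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self._clock = clock
        self._buckets: dict[str, tuple[float, float]] = {}  # client -> (tokens, last update)

    def allow(self, client_id: str) -> bool:
        now = self._clock()
        tokens, last = self._buckets.get(client_id, (self.capacity, now))
        tokens = min(self.capacity, tokens + (now - last) * self.rate)  # refill since last request
        if tokens >= 1:
            self._buckets[client_id] = (tokens - 1, now)
            return True   # serve from cache or forward to the origin
        self._buckets[client_id] = (tokens, now)
        return False      # dropped at the edge; the origin never sees it


if __name__ == "__main__":
    fake_now = [0.0]
    limiter = TokenBucketLimiter(rate=1, capacity=3, clock=lambda: fake_now[0])
    # A burst of 5 requests from one client: the first 3 pass, the rest are dropped.
    print([limiter.allow("203.0.113.7") for _ in range(5)])
```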

CDN Outages

However, CDN providers are also prone to disruptions and outages. For example, a massive outage on 8 June 2021 took down many major websites and online services. The most notable affected services included:

- Hulu

- CNN

- The New York Times

- The Guardian

- Twitch

- Spotify

- Vimeo

- HBO Max

The cause was traced to Fastly, a CDN provider. On 12 May, Fastly had deployed a software update containing a bug that could be triggered by a specific customer configuration. On 8 June, a customer pushed a valid configuration change that triggered the bug, causing about 85% of Fastly's network to return errors and disrupting services across the web. Fortunately, the issue was resolved within about an hour, and the dependent services recovered.

Deployment Strategy

Data Versioning

Proper version control of your content is essential. It ensures that you can easily roll back to a previous working state when a code issue seriously breaks your application's functionality. You may also need separate CDN instances for the old and new versions to prevent issues such as a single CDN instance continuing to serve old content from its cache.
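The separate-instances idea can be sketched as publishing each release under its own CDN endpoint and tracking which version is active, so rolling back means reactivating the previous endpoint whose cache is still intact. The `ReleaseManager` class and the `vN.cdn.example.com` hostnames are hypothetical; this illustrates the idea rather than a specific provider's feature.

```python
from dataclasses import dataclass, field


@dataclass
class ReleaseManager:
    """Tracks deployed versions, each served by its own (hypothetical) CDN endpoint."""
    endpoints: dict[str, str] = field(default_factory=dict)  # version -> base URL
    history: list[str] = field(default_factory=list)         # versions in activation order

    def deploy(self, version: str) -> None:
        # Each version gets a separate endpoint, so no cache ever mixes versions.
        self.endpoints[version] = f"https://{version}.cdn.example.com"
        self.history.append(version)

    @property
    def active(self) -> str:
        return self.history[-1]

    def rollback(self) -> str:
        """Reactivate the previous version; its endpoint and cache are untouched."""
        if len(self.history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self.history.pop()
        return self.active

    def asset_url(self, path: str) -> str:
        return f"{self.endpoints[self.active]}/{path.lstrip('/')}"


if __name__ == "__main__":
    releases = ReleaseManager()
    releases.deploy("v1")
    releases.deploy("v2")
    print(releases.asset_url("/app.js"))  # served from the v2 endpoint
    releases.rollback()                   # v2 is broken: switch back
    print(releases.asset_url("/app.js"))  # served from the v1 endpoint again
```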

Bundling and Minification

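You can reduce load times by decreasing the size of your files through bundling and minification. These techniques are commonly applied to CSS and JavaScript: bundling combines several files into one to reduce the number of requests, and minification strips out unnecessary characters, such as whitespace and comments, without changing the underlying functionality.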
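As a rough illustration of both techniques, the sketch below minifies CSS with a few regular expressions and bundles several stylesheets into a single string. It is deliberately naive (for example, it ignores comment-like text inside strings and would merge a significant space before a pseudo-class such as `a :hover`); in practice you would use an established build tool. The function names are hypothetical.

```python
import re


def minify_css(css: str) -> str:
    """Naive CSS minifier: removes comments and unnecessary whitespace."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # strip /* ... */ comments
    css = re.sub(r"\s+", " ", css)                         # collapse whitespace runs
    css = re.sub(r"\s*([{}:;,])\s*", r"\1", css)           # drop spaces around punctuation
    css = css.replace(";}", "}")                           # last semicolon in a block is optional
    return css.strip()


def bundle(stylesheets: list[str]) -> str:
    """Concatenate minified stylesheets so the browser needs only one request."""
    return "".join(minify_css(s) for s in stylesheets)


if __name__ == "__main__":
    files = [
        "/* base styles */\nbody {\n  margin: 0;\n  font-family: sans-serif;\n}\n",
        ".button {\n  color: #333;\n  padding: 4px 8px;\n}\n",
    ]
    print(bundle(files))
    # body{margin:0;font-family:sans-serif}.button{color:#333;padding:4px 8px}
```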

File Compression

Another option is to compress files, for example with gzip or Brotli, before sending them to the CDN provider or to clients. The smaller file size can significantly improve performance. However, do not overuse it: compressing files that are already in a compressed format, such as MP3, MP4, ZIP, or JPG, gains little, and some CDN providers discourage it.
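The difference between compressing text and compressing already-compressed files is easy to demonstrate with Python's standard `gzip` module. In this sketch, random bytes stand in for formats such as MP3 or JPG, an assumption that holds because well-compressed data looks nearly random to a general-purpose compressor. The sample CSS and the `compression_ratio` helper are illustrative.

```python
import gzip
import random


def compression_ratio(data: bytes) -> float:
    """Compressed size divided by original size (lower is better)."""
    return len(gzip.compress(data)) / len(data)


if __name__ == "__main__":
    # Repetitive text such as CSS or HTML compresses very well.
    css = b".button { color: #333; padding: 4px 8px; }\n" * 500
    # Random bytes stand in for already-compressed files (MP3, MP4, ZIP, JPG).
    already_compressed = random.Random(0).randbytes(len(css))
    print(f"text: {compression_ratio(css):.3f}")
    print(f"already compressed: {compression_ratio(already_compressed):.3f}")
```

The text ratio ends up far below 1, while the random bytes come out slightly larger than the input: gzip finds no redundancy to remove and still adds its own header, which is why compressing such files mostly wastes CPU time.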

Some CDN providers support only static content via caching, while larger providers such as Azure CDN can handle both static content (S3 Storage) and dynamic content (S1 Verizon, S2 Akamai). That said, at the time of writing, there was no support for integration with Azure Data Lake.

- Storage and data lakes are two different concepts. Cloud storage refers to file storage services that hold structured, ready-to-use data in the cloud. Instead of storing data locally on-premises, you upload it to the cloud and can then easily serve it to your end applications.

- A data lake is a centralized repository that stores all forms of data, both structured and unstructured, at any scale. You can think of it as a store of raw data that you access and process as needed. Data lakes are mostly used for big data, real-time analytics, and storing machine learning training data.

Conclusion

Let’s recap what you have learned today.

This article started with a brief introduction to CDNs and the problems of serving content from a single origin server. It then covered the fundamental concepts behind CDNs and the advantages they offer.

Finally, it discussed how CDN providers are not immune to outages, along with several deployment strategies and how providers differ when serving static and dynamic content.